# Models and Accuracies

This page overviews different OpenFace neural network models and is intended for advanced users.

# Model Definitions

The parameter counts below are for models with 128-dimensional embeddings and do not include the batch normalization running means and variances.

| Model | Number of Parameters |
|---|---|
| [nn4.small2](https://github.com/cmusatyalab/openface/blob/master/models/openface/nn4.small2.def.lua) | 3,733,968 |
| [nn4.small1](https://github.com/cmusatyalab/openface/blob/master/models/openface/nn4.small1.def.lua) | 5,579,520 |
| [nn4](https://github.com/cmusatyalab/openface/blob/master/models/openface/nn4.def.lua) | 6,959,088 |
| [nn2](https://github.com/cmusatyalab/openface/blob/master/models/openface/nn2.def.lua) | 7,472,144 |

# Pre-trained Models

Models can be trained in different ways with different datasets.
Pre-trained models are versioned and should be released with a corresponding model definition.
Switch between models with caution because their embeddings are not compatible with each other: an embedding produced by one model cannot be meaningfully compared with an embedding produced by another.

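
To make the incompatibility concrete, the following Python sketch (not part of OpenFace) tags each embedding with the name of the model that produced it and refuses to compare embeddings across models. `TaggedEmbedding` and `squared_l2_distance` are hypothetical names, and using squared Euclidean distance as the comparison metric is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class TaggedEmbedding:
    """An embedding vector plus the name of the pre-trained model that produced it."""
    model: str               # e.g. "nn4.small2.v1"
    vector: Sequence[float]  # 128 values for the models on this page


def squared_l2_distance(a: TaggedEmbedding, b: TaggedEmbedding) -> float:
    """Squared Euclidean distance; raises if the embeddings come from different models."""
    if a.model != b.model:
        raise ValueError(f"cannot compare a {a.model} embedding with a {b.model} embedding")
    if len(a.vector) != len(b.vector):
        raise ValueError("embedding dimensions differ")
    return sum((x - y) ** 2 for x, y in zip(a.vector, b.vector))


# Toy 3-dimensional vectors for readability.
e1 = TaggedEmbedding("nn4.small2.v1", (1.0, 2.0, 3.0))
e2 = TaggedEmbedding("nn4.small2.v1", (1.0, 2.0, 5.0))
e3 = TaggedEmbedding("nn4.v1", (1.0, 2.0, 3.0))
print(squared_l2_distance(e1, e2))  # 4.0
```

Calling `squared_l2_distance(e1, e3)` raises `ValueError`, which is the point: mixing embeddings from `nn4.small2.v1` and `nn4.v1` should fail loudly rather than return a meaningless number.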

The current models are trained on a combination of the two largest publicly available face recognition datasets based on names (as of August 2015):
[FaceScrub](http://vintage.winklerbros.net/facescrub.html)
and [CASIA-WebFace](http://arxiv.org/abs/1411.7923).

The models can be downloaded from our storage servers:

+ [nn4.v1](http://openface-models.storage.cmusatyalab.org/nn4.v1.t7)
+ [nn4.v2](http://openface-models.storage.cmusatyalab.org/nn4.v2.t7)
+ [nn4.small1.v1](http://openface-models.storage.cmusatyalab.org/nn4.small1.v1.t7)
+ [nn4.small2.v1](http://openface-models.storage.cmusatyalab.org/nn4.small2.v1.t7)

The API differences between the models are:

| Model | Alignment `landmarkIndices` |
|---|---|
| nn4.v1 | `openface.AlignDlib.INNER_EYES_AND_BOTTOM_LIP` |
| nn4.v2 | `openface.AlignDlib.OUTER_EYES_AND_NOSE` |
| nn4.small1.v1 | `openface.AlignDlib.OUTER_EYES_AND_NOSE` |
| nn4.small2.v1 | `openface.AlignDlib.OUTER_EYES_AND_NOSE` |
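
Passing the wrong `landmarkIndices` produces faces aligned differently from what a model was trained on, so it can help to keep the mapping from the table above in one place. The Python sketch below does that. `landmark_indices_for` is a hypothetical helper, and the index triples are copied as an assumption from `openface/align_dlib.py` (indices into dlib's 68-point landmark model); check them against your installed OpenFace version.

```python
# Assumed copies of the constants in openface/align_dlib.py (dlib 68-point indices).
INNER_EYES_AND_BOTTOM_LIP = [39, 42, 57]
OUTER_EYES_AND_NOSE = [36, 45, 33]

# Which alignment each pre-trained model expects, following the table above.
LANDMARK_INDICES_BY_MODEL = {
    "nn4.v1": INNER_EYES_AND_BOTTOM_LIP,
    "nn4.v2": OUTER_EYES_AND_NOSE,
    "nn4.small1.v1": OUTER_EYES_AND_NOSE,
    "nn4.small2.v1": OUTER_EYES_AND_NOSE,
}


def landmark_indices_for(model_name: str) -> list:
    """Return the alignment landmark indices for a pre-trained model name."""
    try:
        return LANDMARK_INDICES_BY_MODEL[model_name]
    except KeyError:
        raise ValueError(f"unknown model {model_name!r}") from None


# With OpenFace installed, the result would be passed to alignment, for example:
#   align = openface.AlignDlib(dlib_predictor_path)
#   face = align.align(96, rgb_img, bb, landmarkIndices=landmark_indices_for("nn4.small2.v1"))
print(landmark_indices_for("nn4.v1"))
```

In code that already imports `openface`, referencing `openface.AlignDlib.OUTER_EYES_AND_NOSE` and `openface.AlignDlib.INNER_EYES_AND_BOTTOM_LIP` directly is safer than copying the numbers.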


## Performance

Performance is measured by averaging 500 forward passes with
[util/profile-network.lua](https://github.com/cmusatyalab/openface/blob/master/util/profile-network.lua).
The following results use OpenBLAS on an 8-core 3.70 GHz CPU and a Tesla K40 GPU.

| Model | Runtime (CPU) | Runtime (GPU) |
|---|---|---|
| nn4.v1 | 75.67 ms ± 19.97 ms | 21.96 ms ± 6.71 ms |
| nn4.v2 | 82.74 ms ± 19.96 ms | 20.82 ms ± 6.03 ms |
| nn4.small1.v1 | 69.58 ms ± 16.17 ms | 15.90 ms ± 5.18 ms |
| nn4.small2.v1 | 58.9 ms ± 15.36 ms | 13.72 ms ± 4.64 ms |
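
The measurement described above (averaging many forward passes and reporting mean ± standard deviation) can be sketched in Python as follows. `profile_forward` is a hypothetical helper, not a port of `util/profile-network.lua`; the `forward` callable stands in for a network forward pass, and the warm-up passes are our own addition that we have not checked against the Lua script.

```python
import statistics
import time
from typing import Callable, Tuple


def profile_forward(forward: Callable[[], object], n_passes: int = 500,
                    n_warmup: int = 10) -> Tuple[float, float]:
    """Call `forward` n_passes times and return (mean_ms, stdev_ms) of the timings."""
    if n_passes < 2:
        raise ValueError("need at least two passes to estimate a standard deviation")
    for _ in range(n_warmup):  # warm-up calls are not included in the statistics
        forward()
    times_ms = []
    for _ in range(n_passes):
        start = time.perf_counter()
        forward()
        times_ms.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(times_ms), statistics.stdev(times_ms)


# A stand-in workload instead of a real network forward pass.
mean_ms, std_ms = profile_forward(lambda: sum(i * i for i in range(1000)))
print(f"{mean_ms:.2f} ms ± {std_ms:.2f} ms")
```

For GPU timings, `forward` must block until the device has finished (for example, by synchronizing after the pass); otherwise only the kernel launch time is measured.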


## Accuracy on the LFW Benchmark

Even though the public datasets we trained on have orders of magnitude less data
than private industry datasets, the accuracy is remarkably high
on the standard
[LFW](http://vis-www.cs.umass.edu/lfw/results.html)
benchmark.
We had to fall back to the deep funneled versions of
58 of the 13,233 LFW images because dlib failed to detect a face or landmarks.

|

2015-11-01 20:52:46 +08:00

|

|

|

|

2016-01-08 07:28:05 +08:00

|

|

|

| Model | Accuracy | AUC |

|

|

|

|

|

|---|---|---|

|

2016-01-13 06:58:30 +08:00

|

|

|

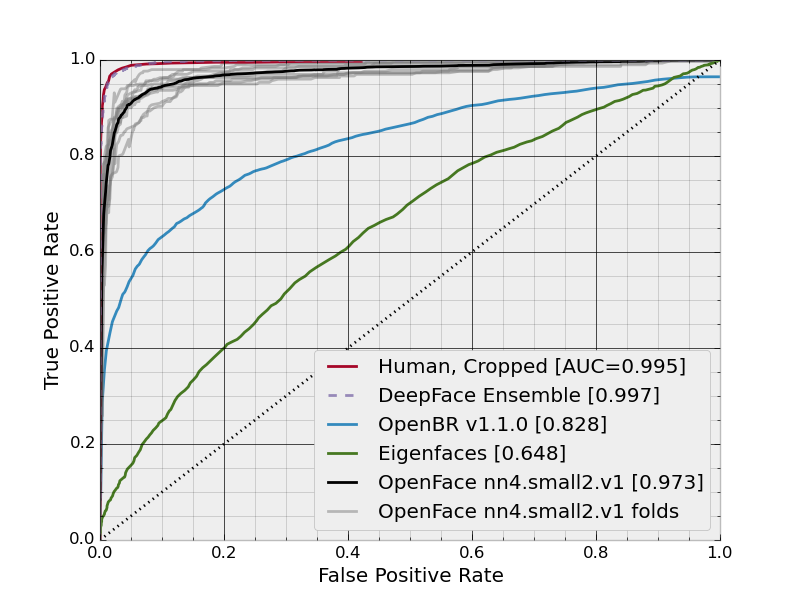

| **nn4.small2.v1** (Default) | 0.9292 ± 0.0134 | 0.973 |

|

|

|

|

|

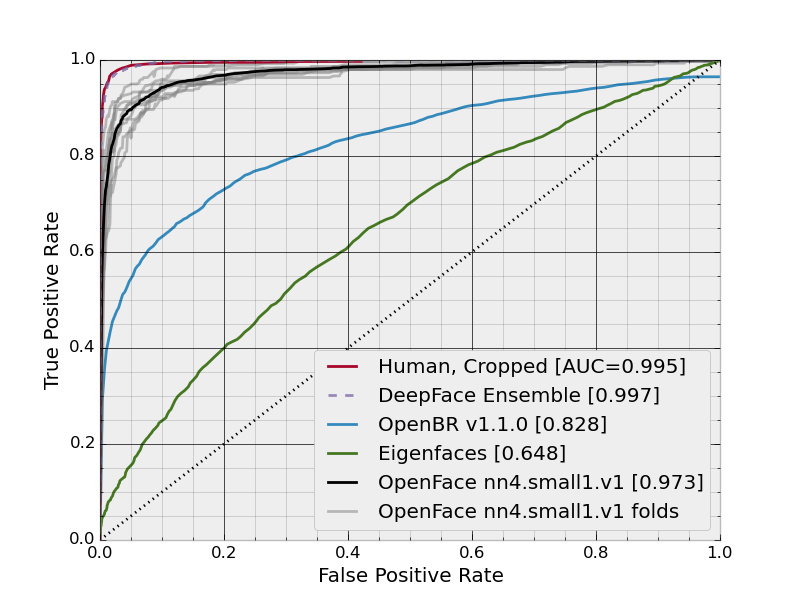

| nn4.small1.v1 | 0.9210 ± 0.0160 | 0.973 |

|

|

|

|

|

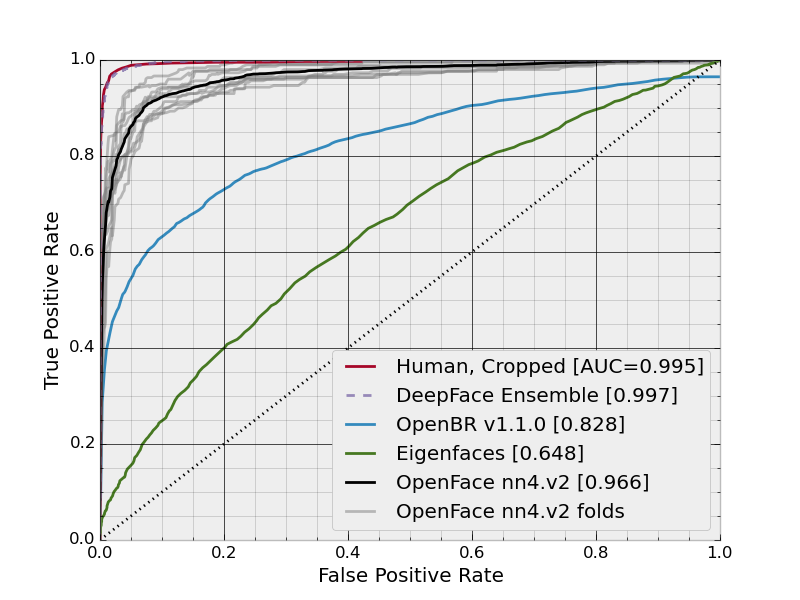

| nn4.v2 | 0.9157 ± 0.0152 | 0.966 |

|

|

|

|

|

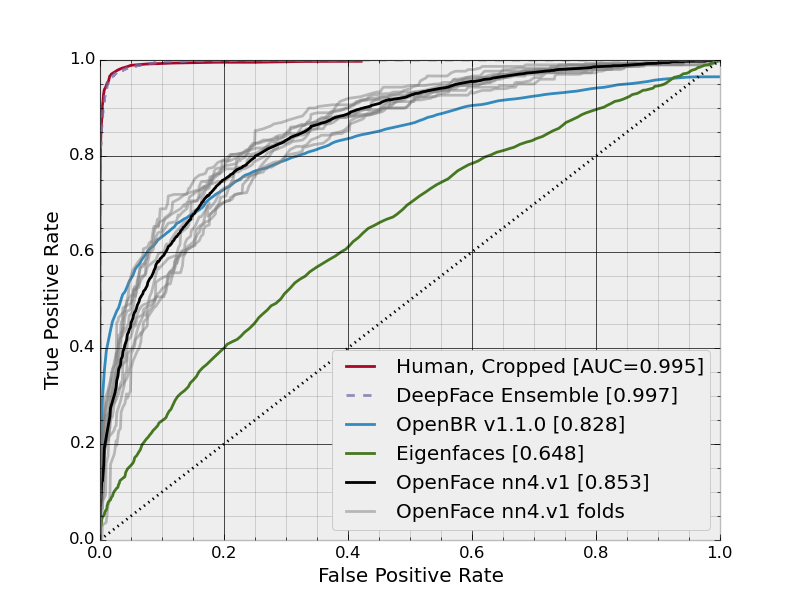

| nn4.v1 | 0.7612 ± 0.0189 | 0.853 |

|

2016-01-08 07:28:05 +08:00

|

|

|

| FaceNet Paper (Reference) | 0.9963 ± 0.009 | not provided |

|

|

|

|

|

|

2016-01-13 04:46:49 +08:00

|

|

|

### ROC Curves

|

|

|

|

|

|

|

|

|

|

#### nn4.small2.v1

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

#### nn4.small1.v1

|

|

|

|

|

|

2016-01-08 07:28:05 +08:00

|

|

|

|

2016-01-13 04:46:49 +08:00

|

|

|

#### nn4.v2

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

#### nn4.v1

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

## Running The LFW Experiment

The results above can be generated with the following commands from the root `openface`
directory, assuming you have downloaded the raw and
[deep funneled](http://vis-www.cs.umass.edu/deep_funnel.html)
LFW data from the [LFW website](http://vis-www.cs.umass.edu/lfw/)
and placed them in `./data/lfw/raw` and `./data/lfw/deepfunneled`.
Also save [pairs.txt](http://vis-www.cs.umass.edu/lfw/pairs.txt) as
`./data/lfw/pairs.txt`.
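
`pairs.txt` lists the image pairs used for verification. The Python sketch below parses it, assuming the standard LFW layout: a header line with the number of folds and the number of pairs per fold, then three-field lines (`name idx1 idx2`) for matched pairs and four-field lines (`name1 idx1 name2 idx2`) for mismatched pairs, with images stored as `<name>/<name>_<4-digit index>.jpg`. `Pair`, `image_path`, and `parse_pairs` are hypothetical helpers, not part of OpenFace, and the sample names are made up.

```python
from typing import List, NamedTuple


class Pair(NamedTuple):
    path1: str
    path2: str
    same: bool  # True if both images show the same person


def image_path(name: str, index: str) -> str:
    """LFW images are stored as <name>/<name>_<4-digit index>.jpg."""
    return f"{name}/{name}_{int(index):04d}.jpg"


def parse_pairs(text: str) -> List[Pair]:
    """Parse the contents of LFW's pairs.txt into Pair tuples."""
    rows = [line.split() for line in text.strip().splitlines()]
    pairs = []
    for fields in rows[1:]:  # rows[0] is "<num_folds> <pairs_per_fold>"
        if len(fields) == 3:  # matched pair: name idx1 idx2
            name, i1, i2 = fields
            pairs.append(Pair(image_path(name, i1), image_path(name, i2), True))
        elif len(fields) == 4:  # mismatched pair: name1 idx1 name2 idx2
            n1, i1, n2, i2 = fields
            pairs.append(Pair(image_path(n1, i1), image_path(n2, i2), False))
        else:
            raise ValueError(f"unexpected line in pairs.txt: {fields}")
    return pairs


sample = "1\t1\nAlice_Example\t1\t4\nAlice_Example\t1\tBob_Example\t2\n"
for p in parse_pairs(sample):
    print(p)
```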

1. Install the prerequisites.
2. Preprocess the raw `lfw` images, changing `8` to however many separate processes you want to run:

   `for N in {1..8}; do ./util/align-dlib.py data/lfw/raw align outerEyesAndNose data/lfw/dlib-affine-sz:96 --size 96 & done`.

   Fall back to the deep funneled versions for images that dlib failed to align:

   `./util/align-dlib.py data/lfw/raw align outerEyesAndNose data/lfw/dlib-affine-sz:96 --size 96 --fallbackLfw data/lfw/deepfunneled`
3. Generate representations with `./batch-represent/main.lua -outDir evaluation/lfw.nn4.small2.v1.reps -model models/openface/nn4.small2.v1.t7 -data data/lfw/dlib-affine-sz:96`.
4. Generate the ROC curve from the `evaluation` directory with `./lfw.py nn4.small2.v1 lfw.nn4.small2.v1.reps`.
   This creates `roc.pdf` in the `lfw.nn4.small2.v1.reps` directory.
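
The accuracy column above is reported as mean ± standard deviation over folds. A common way to compute such numbers on LFW is 10-fold cross-validation over the folds in `pairs.txt`: choose the distance threshold that maximizes accuracy on nine folds, then evaluate it on the held-out fold. The Python sketch below illustrates that protocol on precomputed pair distances. It is not the code in `evaluation/lfw.py`; the function names, the threshold search over observed distances, and the use of the population standard deviation are our assumptions.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def accuracy_at(threshold: float, dists: Sequence[float], same: Sequence[bool]) -> float:
    """Fraction of pairs classified correctly when 'same person' means dist <= threshold."""
    correct = sum((d <= threshold) == s for d, s in zip(dists, same))
    return correct / len(dists)


def kfold_verification_accuracy(dists: Sequence[float], same: Sequence[bool],
                                n_folds: int = 10) -> Tuple[float, float]:
    """Tune the threshold on k-1 folds, test on the held-out fold; return (mean, std)."""
    n = len(dists)
    if n < n_folds:
        raise ValueError("need at least one pair per fold")
    fold_of = [i * n_folds // n for i in range(n)]  # contiguous folds, as in pairs.txt
    candidates = sorted(set(dists))
    fold_accs: List[float] = []
    for k in range(n_folds):
        train = [i for i in range(n) if fold_of[i] != k]
        test = [i for i in range(n) if fold_of[i] == k]
        tr_d = [dists[i] for i in train]
        tr_s = [same[i] for i in train]
        best = max(candidates, key=lambda t: accuracy_at(t, tr_d, tr_s))
        fold_accs.append(accuracy_at(best, [dists[i] for i in test],
                                     [same[i] for i in test]))
    return mean(fold_accs), pstdev(fold_accs)


# Toy, perfectly separable distances: matched pairs at 0.2, mismatched at 1.5.
toy_dists = [0.2, 1.5] * 10
toy_same = [True, False] * 10
acc, std = kfold_verification_accuracy(toy_dists, toy_same)
print(f"{acc:.4f} ± {std:.4f}")
```

Searching every observed distance is quadratic in the number of pairs, which is slow in pure Python for the full 6,000 LFW pairs; a practical version would vectorize the threshold search, for example with NumPy.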


# Projects with Higher Accuracy

If you're interested in higher-accuracy open source code, see:

## [Oxford's VGG Face Descriptor](http://www.robots.ox.ac.uk/~vgg/software/vgg_face/)

This is licensed for non-commercial research purposes.
They've released their softmax network, which obtains 0.9727 accuracy
on LFW, and plan to release their triplet network (0.9913 accuracy)
and data, although the timing is unclear.

Their softmax model doesn't produce embeddings like FaceNet does,
which makes tasks like classification and clustering more difficult.
Their triplet model hasn't been released yet but will provide
FaceNet-like embeddings.
The triplet model will be supported by OpenFace once it's released.
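
The practical value of an embedding is that distances between vectors can be compared directly. As a minimal sketch, assuming FaceNet-style embeddings where a smaller Euclidean distance means more similar faces, classification can reduce to a nearest-neighbor lookup against a labeled gallery, with no retraining when a new identity is added. `nearest_identity`, the `max_distance` cutoff, and the toy 2-D vectors are hypothetical; embeddings from the models on this page are 128-dimensional.

```python
import math
from typing import Dict, List, Optional, Sequence


def nearest_identity(query: Sequence[float],
                     gallery: Dict[str, List[Sequence[float]]],
                     max_distance: float) -> Optional[str]:
    """Return the gallery identity closest to `query`, or None if none is within max_distance."""
    best_name, best_dist = None, math.inf
    for name, embeddings in gallery.items():
        for emb in embeddings:
            d = math.dist(query, emb)  # Euclidean distance (Python 3.8+)
            if d < best_dist:
                best_name, best_dist = name, d
    return best_name if best_dist <= max_distance else None


gallery = {"alice": [(0.0, 0.0), (0.1, 0.0)], "bob": [(1.0, 1.0)]}
print(nearest_identity((0.05, 0.05), gallery, max_distance=0.5))  # alice
print(nearest_identity((5.0, 5.0), gallery, max_distance=0.5))    # None
```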


## [Deep Face Representation](https://github.com/AlfredXiangWu/face_verification_experiment)

This uses Caffe and doesn't have a license yet.
Its accuracy on LFW is 0.9777.
This model doesn't produce embeddings like FaceNet does,
which makes tasks like classification and clustering more difficult.