
What is wrong with scene text recognition model comparisons? dataset and model analysis

| paper | download training and evaluation data | download failure cases and cleansed label |

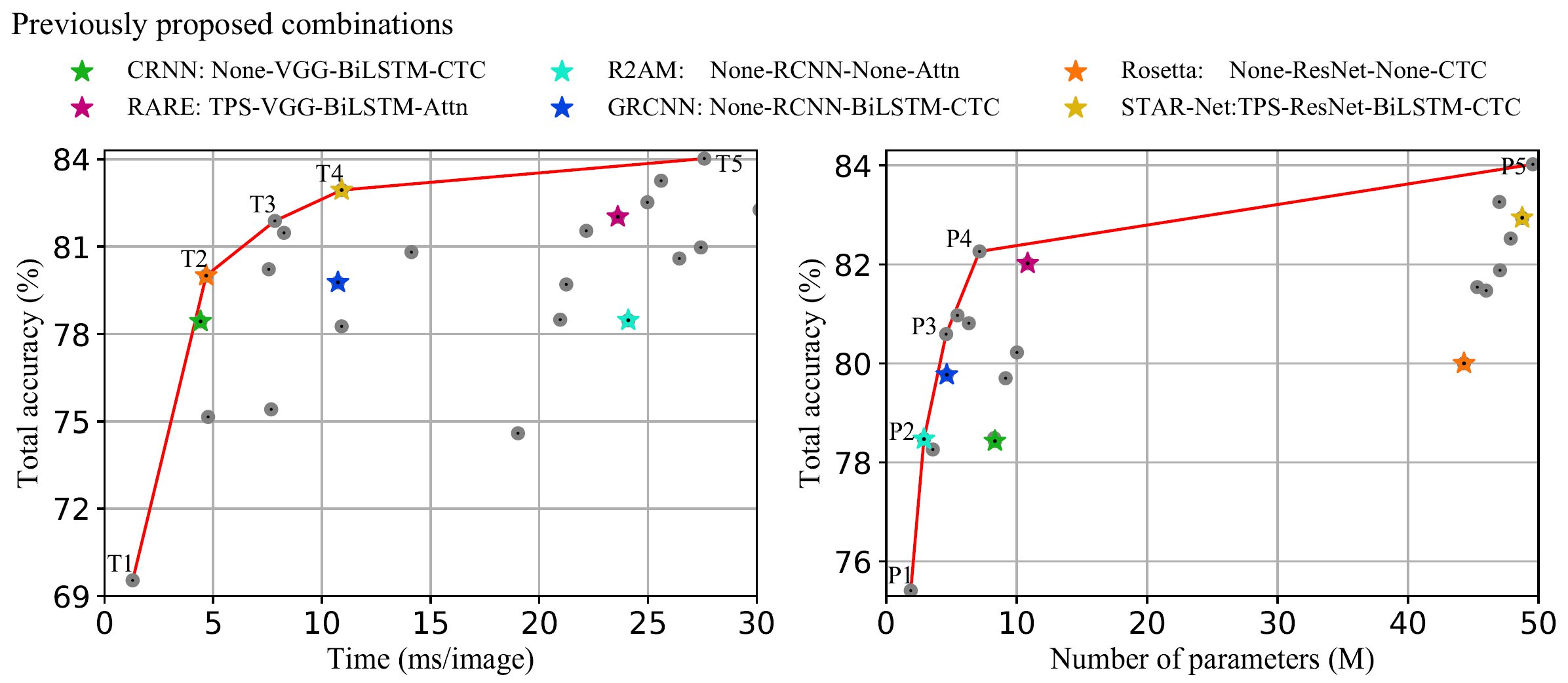

PyTorch implementation of our four-stage STR framework, which most existing STR models fit into.

This framework makes it possible to measure module-wise contributions to performance in terms of accuracy, speed, and memory demand, under one consistent set of training and evaluation datasets.

Such analyses remove the obstacles that inconsistent comparisons place in the way of understanding the performance gains of existing modules.
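
To make the four-stage structure concrete, the sketch below enumerates every model that can be assembled from the module options this README lists under Arguments (2 x 3 x 2 x 2 = 24 combinations). The `STAGES` dictionary and the `all_combinations` helper are illustrative names, not part of the repository.

```python
from itertools import product

# Module options per stage, as listed in the Arguments section of this README.
STAGES = {
    "Transformation": ["None", "TPS"],
    "FeatureExtraction": ["VGG", "RCNN", "ResNet"],
    "SequenceModeling": ["None", "BiLSTM"],
    "Prediction": ["CTC", "Attn"],
}


def all_combinations():
    """Return every four-stage combination as a tuple of module names."""
    return list(product(*STAGES.values()))


if __name__ == "__main__":
    combos = all_combinations()
    print(len(combos), "combinations")
    for combo in combos:
        # Join the stage choices with '-' for a compact model name.
        print("-".join(combo))
```

Each tuple maps directly onto the `--Transformation`, `--FeatureExtraction`, `--SequenceModeling`, and `--Prediction` flags used in the commands in this README.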

Getting Started

Dependency

- This work was tested with PyTorch 1.0.1, CUDA 9.0, Python 3.6, and Ubuntu 16.04. You may need `pip3 install torch==1.0.1`.

- Requirements: lmdb, pillow, torchvision, nltk, torch-baidu-ctc

pip3 install lmdb pillow torchvision nltk torch-baidu-ctc

Download lmdb dataset for training and evaluation from here

data_lmdb_release.zip contains the following:

training datasets: MJSynth (MJ)[1] and SynthText (ST)[2]

validation datasets: the union of the training sets of IC13[3], IC15[4], IIIT[5], and SVT[6].

evaluation datasets: benchmark evaluation datasets, consisting of IIIT[5], SVT[6], IC03[7], IC13[3], IC15[4], SVTP[8], and CUTE[9].

Training and evaluation

- Train CRNN[10] model

CUDA_VISIBLE_DEVICES=0 python3 train.py \

--train_data data_lmdb_release/training --valid_data data_lmdb_release/validation \

--select_data MJ-ST --batch_ratio 0.5-0.5 \

--Transformation None --FeatureExtraction VGG --SequenceModeling BiLSTM --Prediction CTC

- Test CRNN[10] model

CUDA_VISIBLE_DEVICES=0 python3 test.py \

--eval_data data_lmdb_release/evaluation --benchmark_all_eval \

--Transformation None --FeatureExtraction VGG --SequenceModeling BiLSTM --Prediction CTC \

--saved_model saved_models/None-VGG-BiLSTM-CTC-Seed1111/best_accuracy.pth

- Also try training and testing our best-accuracy combination (TPS-ResNet-BiLSTM-Attn).

CUDA_VISIBLE_DEVICES=0 python3 train.py \

--train_data data_lmdb_release/training --valid_data data_lmdb_release/validation \

--select_data MJ-ST --batch_ratio 0.5-0.5 \

--Transformation TPS --FeatureExtraction ResNet --SequenceModeling BiLSTM --Prediction Attn

CUDA_VISIBLE_DEVICES=0 python3 test.py \

--eval_data data_lmdb_release/evaluation --benchmark_all_eval \

--Transformation TPS --FeatureExtraction ResNet --SequenceModeling BiLSTM --Prediction Attn \

--saved_model saved_models/TPS-ResNet-BiLSTM-Attn-Seed1111/best_accuracy.pth
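
The `--saved_model` paths in the commands above suggest that checkpoints are stored in a directory named after the four chosen modules plus a seed. The helper below is a hypothetical sketch of that convention, inferred only from the two example paths. The name `checkpoint_path`, the `root` parameter, and the default seed of 1111 are assumptions, so check `train.py` for the actual naming logic.

```python
from pathlib import PurePosixPath


def checkpoint_path(transformation, feature_extraction, sequence_modeling,
                    prediction, seed=1111, root="saved_models"):
    """Build a checkpoint path matching the pattern seen in the example commands.

    Hypothetical helper: the naming pattern is inferred from the README examples.
    """
    exp_name = "-".join(
        [transformation, feature_extraction, sequence_modeling, prediction]
    )
    return str(PurePosixPath(root) / f"{exp_name}-Seed{seed}" / "best_accuracy.pth")


if __name__ == "__main__":
    print(checkpoint_path("None", "VGG", "BiLSTM", "CTC"))
    print(checkpoint_path("TPS", "ResNet", "BiLSTM", "Attn"))
```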

Arguments

- `--train_data`: folder path to the training lmdb dataset.
- `--valid_data`: folder path to the validation lmdb dataset.
- `--eval_data`: folder path to the evaluation lmdb dataset (used with test.py).
- `--select_data`: select training data. The default is MJ-ST, which means MJ and ST are used as training data.
- `--batch_ratio`: assign the ratio of each selected dataset in the batch. The default is 0.5-0.5, which means 50% of the batch is filled with MJ and the other 50% is filled with ST.
- `--Transformation`: select the Transformation module [None | TPS].
- `--FeatureExtraction`: select the FeatureExtraction module [VGG | RCNN | ResNet].
- `--SequenceModeling`: select the SequenceModeling module [None | BiLSTM].
- `--Prediction`: select the Prediction module [CTC | Attn].
- `--saved_model`: path to the saved model to evaluate.
- `--benchmark_all_eval`: evaluate with 10 evaluation dataset versions, the same as Table 1 in our paper.
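
As an illustration of how `--select_data` and `--batch_ratio` relate, the sketch below splits both values on `-` and turns the ratios into per-dataset sample counts. The function name `split_batch`, the `batch_size` parameter, and the rounding rule are assumptions made for this example; the repository's own logic may differ.

```python
def split_batch(select_data, batch_ratio, batch_size):
    """Map each selected dataset to the number of samples it contributes per batch.

    Hypothetical helper; rounding to the nearest integer (minimum 1) is an
    assumption made for illustration.
    """
    names = select_data.split("-")
    ratios = [float(r) for r in batch_ratio.split("-")]
    if len(names) != len(ratios):
        raise ValueError(
            "select_data and batch_ratio must have the same number of parts"
        )
    return {
        name: max(round(batch_size * ratio), 1)
        for name, ratio in zip(names, ratios)
    }


if __name__ == "__main__":
    # With the default values, half of each batch comes from MJ and half from ST.
    print(split_batch("MJ-ST", "0.5-0.5", 192))
```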

Download failure cases and cleansed label from here

image_release.zip contains failure case images and benchmark evaluation images with cleansed label.

When you need to create lmdb dataset

pip3 install fire

python3 create_lmdb_dataset.py --inputPath data/ --gtFile data/gt.txt --outputPath result/

The gt.txt file should contain one entry per line, in the form {imagepath}\t{label}\n

For example

test/word_1.png Tiredness

test/word_2.png kills

test/word_3.png A

...
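
A ground-truth file in this tab-separated format can be produced and checked with a few lines of Python. The sketch below assumes labels contain no tab or newline characters; `write_gt_file` and `read_gt_file` are hypothetical helpers, not functions from `create_lmdb_dataset.py`.

```python
def write_gt_file(path, samples):
    """Write (image_path, label) pairs, one tab-separated pair per line."""
    with open(path, "w", encoding="utf-8") as f:
        for image_path, label in samples:
            if "\t" in label or "\n" in label:
                raise ValueError(f"label for {image_path} contains a tab or newline")
            f.write(f"{image_path}\t{label}\n")


def read_gt_file(path):
    """Parse a gt.txt file back into a list of (image_path, label) pairs."""
    pairs = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.rstrip("\n")
            if not line:
                continue
            # Split on the first tab only, so labels may contain spaces.
            image_path, label = line.split("\t", 1)
            pairs.append((image_path, label))
    return pairs


if __name__ == "__main__":
    samples = [
        ("test/word_1.png", "Tiredness"),
        ("test/word_2.png", "kills"),
        ("test/word_3.png", "A"),
    ]
    write_gt_file("gt.txt", samples)
    print(read_gt_file("gt.txt"))
```

Reading the file back before running `create_lmdb_dataset.py` is a cheap way to catch lines where the tab separator was replaced by spaces.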

Acknowledgements

This implementation is based on the repositories crnn.pytorch and ocr_attention.

Reference

[1] M. Jaderberg, K. Simonyan, A. Vedaldi, and A. Zisserman. Synthetic data and artificial neural networks for natural scene text recognition. In Workshop on Deep Learning, NIPS, 2014.

[2] A. Gupta, A. Vedaldi, and A. Zisserman. Synthetic data for text localisation in natural images. In CVPR, 2016.

[3] D. Karatzas, F. Shafait, S. Uchida, M. Iwamura, L. G. i Bigorda, S. R. Mestre, J. Mas, D. F. Mota, J. A. Almazan, and L. P. De Las Heras. ICDAR 2013 robust reading competition. In ICDAR, pages 1484–1493, 2013.

[4] D. Karatzas, L. Gomez-Bigorda, A. Nicolaou, S. Ghosh, A. Bagdanov, M. Iwamura, J. Matas, L. Neumann, V. R. Chandrasekhar, S. Lu, et al. ICDAR 2015 competition on robust reading. In ICDAR, pages 1156–1160, 2015.

[5] A. Mishra, K. Alahari, and C. Jawahar. Scene text recognition using higher order language priors. In BMVC, 2012.

[6] K. Wang, B. Babenko, and S. Belongie. End-to-end scene text recognition. In ICCV, pages 1457–1464, 2011.

[7] S. M. Lucas, A. Panaretos, L. Sosa, A. Tang, S. Wong, and R. Young. ICDAR 2003 robust reading competitions. In ICDAR, pages 682–687, 2003.

[8] T. Q. Phan, P. Shivakumara, S. Tian, and C. L. Tan. Recognizing text with perspective distortion in natural scenes. In ICCV, pages 569–576, 2013.

[9] A. Risnumawan, P. Shivakumara, C. S. Chan, and C. L. Tan. A robust arbitrary text detection system for natural scene images. In ESWA, volume 41, pages 8027–8048, 2014.

[10] B. Shi, X. Bai, and C. Yao. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. In TPAMI, volume 39, pages 2298–2304, 2017.

Citation

If you find this work useful for your research, please cite:

@article{baek2019STRcomparisons,

title={What is wrong with scene text recognition model comparisons? dataset and model analysis},

author={Baek, Jeonghun and Kim, Geewook and Lee, Junyeop and Park, Sungrae and Han, Dongyoon and Yun, Sangdoo and Oh, Seong Joon and Lee, Hwalsuk},

journal={arXiv preprint arXiv:1904.01906},

year={2019}

}

Contact

Feel free to contact me if you have any questions (Jeonghun Baek jh.baek@navercorp.com).

License

Copyright (c) 2019-present NAVER Corp.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.